Collision response.

Urgh, the night was too short but I had to wake up early otherwise I’ll be a zombie tomorrow Monday.

Since my last post, we are experts in detecting collisions in our super simplistic universe. It is time to react to it.

I can see two things to do:

- We must correct the position of the entity to put it in a legit place, that is, a place where it does not collide with anything.

- We must transfer energy between the collider and the collidee, including some loss to simulate friction in deformable bodies. This changes velocities.

Position correction.

The simple case.

Since we’re doing science here, I started giving numbers to my figures. See Figure 1. On this figure, the arrows do not represent speeds, they represent translation (movement, displacement) vectors.

- An entity (circle) is at position Black.

- We integrate its motion.

- The motion integrator says that after the time step, the entity should be in the Red position.

- Our collision detector notices that the Red circle intersects the edge of an obstacle (the gray thing). We must respond to this collision.

- We measure how much the entity penetrated the obstacle: we get a penetration vector.

- We add the penetration vector to the Red position to get the Green position. That’s where our entity ends up.

Doing it that way has an advantage: when you hit a wall you don’t get stuck on it but you start sliding. We see that behavior in many games, now you know how it is done.

The correction algorithm is identical for collisions with circles and corners, only the detection changes. Actually, a corner is a circle with a radius of 0, I can use the same code.
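The correction step can be sketched in a few lines. This is only an illustration, not the Infiniworld code: the function name `penetration_vector` and its arguments are made up for the example, and the obstacle is taken to be a circle (a corner being the same circle with a radius of 0).

```python
import math

def penetration_vector(cx, cy, radius, ox, oy, obstacle_radius=0.0):
    """Return the (dx, dy) push that moves the circle out of the obstacle.

    The obstacle is a circle centered on (ox, oy); a corner is the same
    thing with obstacle_radius=0.  Returns None when there is no overlap.
    """
    dx, dy = cx - ox, cy - oy
    distance = math.hypot(dx, dy)
    overlap = radius + obstacle_radius - distance
    if overlap <= 0.0 or distance == 0.0:
        # Contact, no collision, or no defined push direction.
        return None
    # Push along the line joining the two centers, away from the obstacle.
    return (dx / distance * overlap, dy / distance * overlap)

red = (0.3, 0.4)  # Position proposed by the integrator.
push = penetration_vector(red[0], red[1], 1.0, 0.0, 0.0)
green = (red[0] + push[0], red[1] + push[1])  # Corrected position.
```

Because the push only acts along the line between the centers, whatever part of the movement was parallel to the obstacle survives, which is where the sliding comes from.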

Chained collisions.

Now, what if Green is also a bad position? See figure 2. Well, Green becomes the new Red, and we start again at step number 4. We keep doing that until we stop being in wrong places. Just to make sure we don’t get stuck in an endless loop, I will authorize a maximum of, let’s say, 5 such attempts. After that, I cancel the whole movement and the entity is sent back to the Black position. 5 is an arbitrary number, maybe there is a better one; I will log something in the console when this number is exceeded so that I can see the situations where things are going wrong. Maybe I will come up with a better algorithm to solve these situations.

Simultaneous collisions.

Let’s look at figure 2 again. The first red circle intersects two edges simultaneously. We reacted by correcting for the closest collision first. Indeed, we hit the right wall before the bottom wall so it makes sense to react to these collisions that way. We ordered collisions by distances.

But maybe we could also correct all these collisions at the same time instead of going back to step 4 (detecting the new closest collision). Since each collision comes with a penetration vector, we just add them all and we get our Green position immediately. Only after correcting for all these collisions we go back to step 4. Indeed, maybe something else that we didn’t notice with our first Red position is in the way now. Look at Figure 3.

Figure 3 shows a small entity with which we did not collide after our integration. We corrected for the two walls and we got the Green position, but now we have to test Green for collisions again otherwise we miss the small entity. GOTO 4.
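Here is a minimal sketch of the whole correction loop, under simplifying assumptions: obstacles are plain circles given as `(x, y, radius)` tuples, and the names `find_penetrations`, `correct_position` and `MAX_ATTEMPTS` are invented for this example. It adds all the penetration vectors of one pass at once, tests the new position again, and gives up after 5 corrections.

```python
import math

MAX_ATTEMPTS = 5  # Arbitrary, as discussed above.

def find_penetrations(pos, radius, obstacles):
    """Penetration vectors of a circle against circular obstacles."""
    vectors = []
    for ox, oy, obstacle_radius in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        dist = math.hypot(dx, dy)
        overlap = radius + obstacle_radius - dist
        if overlap > 0.0 and dist > 0.0:
            vectors.append((dx / dist * overlap, dy / dist * overlap))
    return vectors

def correct_position(black, red, radius, obstacles):
    """Return a legit position, or black if none is found in time."""
    pos = red
    for _ in range(MAX_ATTEMPTS):
        vectors = find_penetrations(pos, radius, obstacles)
        if not vectors:
            return pos  # Nothing in the way: this is our Green.
        # Simultaneous collisions: add every penetration vector at once,
        # then loop to test the new position again (the GOTO 4).
        pos = (pos[0] + sum(v[0] for v in vectors),
               pos[1] + sum(v[1] for v in vectors))
    if not find_penetrations(pos, radius, obstacles):
        return pos
    print("collision correction gave up after", MAX_ATTEMPTS, "attempts")
    return black  # Cancel the whole movement.
```

A circle squeezed between two obstacles that are too close together bounces back and forth between them forever, which is exactly the case the attempt limit is there to catch.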

Velocity correction.

Elastic collision.

We are going to implement elastic collisions. You have an elastic collision when, after the collision, the kinetic energy and the momentum of the system are the same as before.

Figure 4 shows a frontal collision, but our collisions won’t always be frontal. See figure 5 for an illustration.

Figure 5: geometry of a collision. Only the normal component of the velocities is modified by the elastic collision.

When two bodies collide, there are two preferred axes for describing the collision: the normal axis and the tangent axis. If the velocities of the two bodies were collinear to the normal axis, then the collision would be frontal. In any case, only the normal component of the velocities is modified during an elastic collision. So the first thing we must do is decompose the velocities, which reduces our problem from vector to scalar. Then we can use the formulas from the Wikipedia page to exchange the energy. Finally we rebuild the vectors.
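The three steps (decompose, exchange, rebuild) could look like the sketch below. The function name and its arguments are assumptions for the example; the two formulas in the middle are the standard ones for a one-dimensional elastic collision between masses `m1` and `m2`.

```python
def elastic_collision(m1, v1, m2, v2, normal):
    """Velocities after an elastic collision; normal is a unit vector."""
    nx, ny = normal
    tx, ty = -ny, nx  # Tangent axis, perpendicular to the normal.
    # Decompose: the problem becomes scalar along each axis.
    v1n, v1t = v1[0] * nx + v1[1] * ny, v1[0] * tx + v1[1] * ty
    v2n, v2t = v2[0] * nx + v2[1] * ny, v2[0] * tx + v2[1] * ty
    # One-dimensional elastic collision on the normal components only.
    u1n = ((m1 - m2) * v1n + 2.0 * m2 * v2n) / (m1 + m2)
    u2n = ((m2 - m1) * v2n + 2.0 * m1 * v1n) / (m1 + m2)
    # Rebuild the vectors; the tangential components are untouched.
    u1 = (u1n * nx + v1t * tx, u1n * ny + v1t * ty)
    u2 = (u2n * nx + v2t * tx, u2n * ny + v2t * ty)
    return u1, u2

# Two equal masses, one at rest, hit at an angle: the moving body keeps
# its tangential speed and hands its normal speed to the other one.
after_1, after_2 = elastic_collision(1.0, (1.0, 1.0), 1.0, (0.0, 0.0), (1.0, 0.0))
```

A wall can be approximated by giving the second body a mass far larger than the first, in which case the normal component of the first body is simply reversed.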

Energy dissipation, efficiencies.

Unless you are made of rubber or super hard metal, there is very little chance that you bounce back when you hit a wall. We can introduce here two efficiencies: one for the normal component and one for the tangential component. These efficiencies should be between 0 and 1. After applying the formulas for the elastic collision, we multiply our four scalars (two per velocity times two velocities) by these efficiencies. A very high normal efficiency such as 0.95 could simulate the very low loss of energy of a bouncing rubber ball. A lower efficiency such as 0.50 could simulate what happens to you when you run into a wall.

The efficiencies are functions of the material. The two bodies have a priori different materials and their efficiencies are multiplied. A rubber ball (0.95) bounces pretty well on a stone wall (0.99), with a combined efficiency of 0.95 * 0.99 = 0.94, but the same rubber ball does not bounce well at all on you (0.50) since the combined efficiency is 0.95 * 0.50 = 0.475.

It is easy to understand the normal efficiency: it tells you how bouncy the material is. The tangential efficiency is weirder, maybe it can simulate some kind of friction.
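As a small sketch of how the efficiencies might be applied (the names `combined_efficiency` and `dissipate` are hypothetical, and the material values are the ones quoted above):

```python
RUBBER, STONE, HUMAN = 0.95, 0.99, 0.50  # Normal efficiencies from the text.

def combined_efficiency(material_a, material_b):
    """Efficiency of a collision between two materials."""
    return material_a * material_b

def dissipate(normal_speed, tangential_speed, normal_eff, tangential_eff):
    """Scale the two components of one post-collision velocity."""
    return normal_speed * normal_eff, tangential_speed * tangential_eff

ball_on_wall = combined_efficiency(RUBBER, STONE)  # About 0.94.
ball_on_you = combined_efficiency(RUBBER, HUMAN)   # About 0.475.
```

`dissipate` would be called once per body, after the elastic formulas, which covers the four scalars mentioned earlier.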

The order of the collisions.

When we are computing the collision response for the velocities, we cannot do what we did for the positions. For the position correction, we applied all the corrections for all the detected collisions at once. With velocities, the order of the collisions matters. We run the dissipative elastic collision code on all the detected collisions, one by one, starting with the closest one.

Actually, if we were trying to be super precise, we could imagine that after our collision on the right wall of Figure 2 we lost so much speed that we won’t collide with the other wall. There is no way we implement that: doing so would require finding the exact time of the first collision and then restarting our integration from there with the corrected velocity. We are not going for something that precise; instead, we will consider that somehow we did collide with all that, and at the very end we’ll see if we have some speed left. Remember, it all happens within a twentieth of a second (the period of our physics engine).

We have another problem: what if we collide with two things at the same time? The two collisions are at the same distance, so we should compute an elastic collision of three bodies. It could even be worse, with four or five bodies… That’s many variables and only two equations (conservation of energy and conservation of momentum). I could group bodies together and replace them by their center of mass but I declare that “too complicated for now”. I will just apply the collisions one after another, essentially randomly. We are dealing with distances that are way smaller than a pixel here, and we are represented by circles but we are not circles, so let’s not over-design a simplification.

Let’s start coding then!

That should work, right? Something tells me this won’t be done by Monday :).

Collision detection.

In the previous post I explained how I implemented the equations of motion using an RK4 integrator, a Particle class and a couple of Force classes. That was the easy part of the physics engine, for the fun begins now \o/.

Collision detection in games is a big topic. Such a big topic that people have written books about it (I didn’t read them though :p). Fortunately, we do not need to apply these complex models. First, we are in 2D and that simplifies a lot of things. Then, our entities are atomic, not made of fingers and eyeballs that can be sent flying all over the room independently. I won’t be doing this or that. Even what you saw in the Pacman video I posted earlier is beyond my scope since I am not even sure I want to care about rotations.

Boundary boxes.

Everything that moves in the world of Infiniworld is called an Entity. Since they are the only things moving, they are the only things that can collide with anything else. An entity can collide

- with another entity,

- with a tile (some tiles represent walls),

- or with a placeable object.

Placeable objects have not been implemented yet. They can be a chest on the floor, a chair, a boulder, a pillar, a sign…

I am facing a potentially big variety of shapes and I don’t like that at all. If I don’t limit myself, I will still be working on that next year. So this is what I came up with:

In translucent red, the boundary boxes for calculating collisions: for tiles (clear grass on top of a cliff, bottom left), for entities (Minecraft dude and spider) and for a placeable object (the chest).

I searched the tubes for quite some time in order to find a nice game image but I couldn’t so I made one myself :D. You can see the grid in black, it shows the border of the tiles. It’s grass on the floor, and in the bottom-left corner it’s grass too but it’s on top of a steep cliff so it’s brighter (closer to the sun you know) and you can’t go there. There is a chest for some reason. The chest is not a tile, it’s on a tile. And there are two friends hanging out.

The boundary boxes that I chose are:

- squares for the tiles,

- circles for the entities,

- rectangles or circles for the placeable objects, depending on how they look.

Please note that I do not circle the entire entity sprite! If you see a circle, imagine that in fact it’s a cylinder extending upward; the guy is completely in the cylinder. I should have drawn the circle for the spider a bit lower, and the circle for the guy should be smaller. But you get the idea. Likewise, the sprite of the chest is not entirely within the boundary box, you could approach it from behind, and it would mask your legs. It’s almost 3D!

Some entities will be huge, wider than one tile. That’s cool because it means you can hide from them by running in narrow passages.

The placeable objects cannot be placed freely. They place themselves on a tile according to their design. For example, the chest is centered on the tile. A long table, several tiles long, will have to be made from several placeable objects.

The reason I am limiting the placeable objects that way is that it helps me make sure the game is winnable. If you cannot cross the room because it’s cluttered with stuff, you have a poor game experience. At the moment of checking the winnability of the game, I will consider that a tile with a solid placeable object is totally unwalkable even though you could actually squeeze yourself through stuff: better safe than sorry.

So we have to check circles against circles, and circles against square/rectangles.

Circle versus circle.

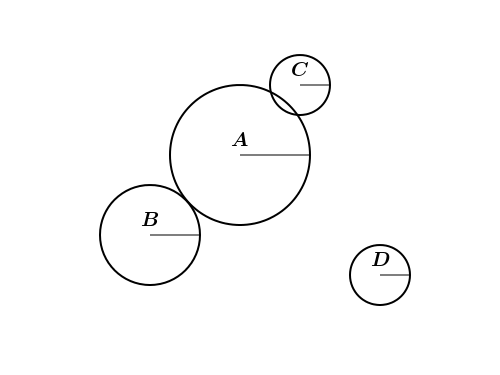

Circles colliding or not. The distance between the centers of the circles A and B is equal to the sum of their radii: they are touching. The distance between the centers of A and C is smaller than the sum of their radii: they are colliding. The distance between the centers of A and D is greater than the sum of their radii, they are neither touching nor colliding.

Testing the collisions of two circles is the easiest of all. All you have to do is to compare the distance between their centers with the sum of their radii. If the distance is greater than the sum of the radii, nothing to worry about. If it’s equal we have contact. If it’s smaller we have a collision and that must be solved. I do not really know what to do with contacts yet… For now I’ll consider that a contact is an absence of collision and that’s it.
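A minimal sketch of that test, with a made-up function name. Comparing squared quantities is an optional shortcut that avoids computing a square root; the strict inequality implements the decision that a contact does not count as a collision.

```python
def circles_collide(ax, ay, ar, bx, by, br):
    """True when circles A and B overlap; a mere contact returns False."""
    dx, dy = bx - ax, by - ay
    reach = ar + br
    # Squared distance against squared sum of radii: same comparison,
    # no square root needed.
    return dx * dx + dy * dy < reach * reach
```

With two circles of radius 1, centers 2 apart means contact (no collision), 1.5 apart means collision, and 3 apart means nothing to worry about.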

Circle versus rectangle.

The distance between the center of A and the edge is greater than the radius of A: no collision. Likewise, the distance of the center of B to the corner is greater than the radius of B: no collision either. But for C and D, the distance to the edge or the corner is smaller than the radius: they are colliding.

We have many more tests to do now. A rectangle is made of four edges and four corners. You can collide on any of these. Since you are an entity, your boundary is a circle. When I speak about your distance to something, I measure that distance from the center of the circle. So if your distance to a corner or an edge is smaller than your radius, you are colliding with something.

Testing eight features per tile is going to be way too expensive from a computation point of view (remember that the maps have about 16000 tiles and that we run the physics 20 times per second). The first thing to do is to prune the tiles: only test against the neighboring tiles. That’s a quick pre-selection to reduce the tiles to test to a maximum of 9 (maybe more if the entity is bigger than a tile). After that, we can make things easier still.

Ever heard of Voronoi diagrams? They’re perfect for us here. After all, we can only collide against the closest feature. On the figure above, the gray lines delimit the Voronoi cells outside of the rectangle. All the points of the cell where the circle A lies are closer to the left edge than to any other edge or corner. Likewise, all the points of the cell where B lies are closer to the top-left corner than to any other corner or edge. So, instead of testing 8 features per rectangle, we only test 1. That’s a good thing. Finding the Voronoi cell you are in is a trivial matter, just compare your x and y with the two x and two y of the rectangle.
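One compact way to write this, offered as a sketch rather than as the actual implementation: clamping the x and the y of the circle center to the rectangle is the same comparison against the two x and two y, and it directly yields the closest point of the single feature (edge or corner) of the Voronoi cell. The function names and the `left, bottom, right, top` convention are assumptions.

```python
import math

def closest_point_on_rectangle(cx, cy, left, bottom, right, top):
    """Closest point of the rectangle to (cx, cy).

    Clamping x and y independently picks the Voronoi cell: if only one
    coordinate is clamped we face an edge, if both are we face a corner.
    """
    px = min(max(cx, left), right)
    py = min(max(cy, bottom), top)
    return px, py

def circle_hits_rectangle(cx, cy, radius, left, bottom, right, top):
    """True when the circle overlaps the rectangle (contact excluded)."""
    px, py = closest_point_on_rectangle(cx, cy, left, bottom, right, top)
    return math.hypot(cx - px, cy - py) < radius
```

Note that a center lying inside the rectangle gives a distance of 0 and is reported as a collision, without saying which way out is the right one.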

Another simplification arises: if two solid (wall/cliff) tiles are touching by an edge, then there is no need to test the two corners they have in common. Just grab a pen and some paper if you don’t believe me. If you have to test a corner more than once, you don’t have to test it at all. Sweet.

A priori and a posteriori collision detection.

There is a very well explained paragraph on Wikipedia on the subject of a priori and a posteriori detection. We’ll settle for the easy one: a posteriori. This is enough for games anyway. It is way, way, way, waaaay easier to implement. It has some disadvantages but they’re worth it. First, we lose some precision, but that’s not a problem because we’re a game, not a scientific application, and we just need a result that’s good enough to be believable. Don’t forget I approximated you with a circle. The second disadvantage of the a posteriori detection is that if you are fast enough, you can go through objects. Let’s do the math:

Let’s say that the radius of the circle of our entity is 0.5 m. And let’s say that our entity is close to the edge of a tile but does not touch it yet. If during the time step to come it moves by 55 cm toward the edge, then the center of the circle will be inside the rectangle, missing the edge completely.

The physics engine runs at 20 Hz. That means that the time step is 1/20=0.05 s. Moving 0.5 m in 0.05 s corresponds to a speed of 10 m/s. If an entity with a radius of 50 cm moves faster than 10 m/s, some collisions can go undetected. The smaller the entity, the smaller the critical speed.
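The arithmetic fits in one line of code. In this sketch, `critical_speed` and `PHYSICS_FREQUENCY` are names chosen for the example:

```python
PHYSICS_FREQUENCY = 20.0  # Hz, so the time step is 1 / 20 = 0.05 s.

def critical_speed(radius, frequency=PHYSICS_FREQUENCY):
    """Speed (m/s) at which an entity moves by one radius per time step."""
    return radius * frequency

big_entity = critical_speed(0.5)     # 10.0 m/s, the case worked out above.
small_entity = critical_speed(0.25)  # 5.0 m/s: smaller means slower limit.
```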

You may wonder why I cannot detect that the center of the entity is inside a forbidden tile. Well, I can detect that, but what do I do then? Shall I push the entity outside the closest edge? What if behind that edge is another forbidden tile? What if that edge is on the wrong side of the wall and you just quantum-tunnelled yourself through? Messy. It is wise to keep speeds reasonable.

However, 10 m/s is still not very fast. The game screen you have seen on screenshots is 13 meters wide. Crossing the whole screen in one second is fast, but not ridiculous. I can imagine some nasty fireballs traveling at that speed. How can we have good collision detections with fast objects?

It’s easy: we just have to rerun the RK4 integrator with a smaller time step. If at the first run the entity moved by more than its radius, then the movement is canceled and instead we run the integration with a time step divided by 2, or 3, or 4, depending on the number of radii you moved. We run it 2, 3 or 4 times to compensate. We test for collision after each and we stop at the first collision we find. It’s very fortunate that we have chosen to integrate with RK4 because this integrator gives nearly the same results for a wide range of time steps.
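The sub-stepping idea can be sketched as follows. Everything here is illustrative: `advance`, `substep_count`, the `integrate(state, dt)` and `collides(state)` callbacks and the `(position, velocity)` state layout are assumptions, and a trivial constant-velocity function stands in for the RK4 integrator.

```python
import math

def substep_count(displacement, radius):
    """Number of sub-steps so that each one moves by at most one radius."""
    return max(1, math.ceil(displacement / radius))

def advance(state, dt, integrate, collides, radius):
    """Integrate over dt, splitting the step when the entity is too fast."""
    trial = integrate(state, dt)
    moved = math.hypot(trial[0][0] - state[0][0], trial[0][1] - state[0][1])
    n = substep_count(moved, radius)
    if n == 1:
        return trial
    # Too fast: cancel the movement and redo it in n smaller steps.
    for _ in range(n):
        state = integrate(state, dt / n)
        if collides(state):
            break  # Stop at the first collision we find.
    return state

def constant_velocity(state, dt):
    """Stand-in integrator: state is ((x, y), (vx, vy))."""
    (x, y), (vx, vy) = state
    return ((x + vx * dt, y + vy * dt), (vx, vy))

def hits_wall(state):
    """A wall at x = 1.2, entity radius 0.5."""
    return state[0][0] + 0.5 > 1.2

# A fireball at 40 m/s would jump 2 m in one step and miss the wall.
end = advance(((0.0, 0.0), (40.0, 0.0)), 0.05, constant_velocity, hits_wall, 0.5)
```

In this example the fireball moves by four radii, so the step is redone in four pieces and the wall is noticed on the second one.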

Tomorrow…

I’ll write about my plans for the collision response: what to do when entities are bumping into stuff.

It’s almost 2am, my eyelids are violently colliding. I go crash in bed. Bye !

Physics engine – integration, wrap up.

I spent quite some time in my previous article writing about the problem of integrating the equations of motion. I looked at three models: Euler, Verlet and RK4. I did not spend much time explaining them because I provided the reader with links to detailed articles about them. After reading this literature, I decided to give RK4 a try.

Download Infiniworld Version 0.0.4.a with RK4 integrator.

Copy-pasting the RK4 integrator.

This was much easier and faster than I anticipated. The RK4 integrator itself did not need any work as it is a well known problem and has well known solutions. I just had to copy paste twenty lines of code from the article Fourth order Runge-Kutta numerical integration, by Doswa (not sure it’s the name of the author, could also be the name of the site). That took me a couple of minutes, hop, done!

Defining particles.

After that, I needed to feed the integrator with data to integrate. I created a new class named Particle. You can see it in the new module physics.py. A Particle represents a point in space with a mass. It has a position and a velocity in that space. Finally, it contains a set of the forces that are acting on it. A particle does not have a shape or a volume/area; I will introduce these elements later in another class when they are needed for detecting and responding to collisions.

From the set of forces, and from its mass, the Particle is able to compute its own acceleration at a given moment in time. That’s the famous $\vec{F} = m\,\vec{a}$.

Calculating forces.

The forces can be anything really. I created two types of forces: a ConstantForce and a KineticFrictionForce. Forces in this framework are actually functions of several parameters. The KineticFrictionForce, which simulates friction and slows down a Particle, has the expression $\vec{F} = \mu\,\vec{v}$: the faster you go, the stronger the friction. If you give a negative value to the friction coefficient $\mu$, your particle will slow down. The ConstantForce is simpler: it does not use any of the parameters and always returns the same thing when you compute it. That is used for making your entity walk, for example.

All that takes 130 lines of code including comments and documentation. Physics engines are easy!
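To give an idea of the shape of such a framework, here is a much shorter sketch. The class names come from the text, but every signature, attribute name and the `(position, velocity, time)` parameter list are guesses made for illustration; the real physics.py may well differ.

```python
class ConstantForce:
    """Always returns the same vector, whatever the parameters."""
    def __init__(self, fx, fy):
        self.vector = (fx, fy)

    def __call__(self, position, velocity, time):
        return self.vector

class KineticFrictionForce:
    """Proportional to the velocity; a negative coefficient slows down."""
    def __init__(self, coefficient):
        self.coefficient = coefficient

    def __call__(self, position, velocity, time):
        return (self.coefficient * velocity[0], self.coefficient * velocity[1])

class Particle:
    """A point with a mass, a position, a velocity and a set of forces."""
    def __init__(self, mass, position, velocity):
        self.mass = mass
        self.position = position
        self.velocity = velocity
        self.forces = set()

    def acceleration(self, position, velocity, time):
        """Sum the forces and divide by the mass."""
        totals = [f(position, velocity, time) for f in self.forces]
        fx = sum(t[0] for t in totals)
        fy = sum(t[1] for t in totals)
        return (fx / self.mass, fy / self.mass)

walker = Particle(2.0, (0.0, 0.0), (1.0, 0.0))
walker.forces.add(ConstantForce(4.0, 0.0))    # The walk force.
walker.forces.add(KineticFrictionForce(-2.0))  # The friction force.
accel = walker.acceleration(walker.position, walker.velocity, 0.0)
```

The acceleration takes the position and velocity as arguments instead of reading them from the particle, so that an integrator can evaluate it at intermediate states.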

Running the physics.

From the EntityModel I removed the position information and put a Particle instead. The EntityModel declares two forces: the walk force and the friction force. When the player presses or releases the movement keys, the walk force is modified. For now, the friction force is never touched, although it will later when the Entity walks on ice.

The last step is to actually run these physics computations. This is done at the AreaModel level. The AreaModel is a self-contained space with all its landscapes and entities, so it has all the information it needs to calculate trajectories and resolve collisions. Then, I just ask the main game loop to post a RunPhysicsEvent every so often.

That’s it. Very straightforward, it works immediately. You’ve got to like this Model-View-Controller pattern, it really makes our lives easier.

Future improvements.

Our implementation is not perfect though. There are no collision detections at all, that’s obvious. But there are other things that can be improved:

- We should introduce a speed threshold: if the speed of a particle is under that threshold then it is considered null. We are not doing that yet, and as a result, many EntityMovedEvent’s are posted even if the entity is moving at the speed of a picometer per century. The kinetic friction force slows particles down but never stops them completely.

- It is time to have a better time loop too: we do not need, and therefore we do not want, to update the physics 60 times per second. We chose RK4 because this algorithm is good with bigger time steps. We should have the physics running at something like 20 Hz. It is commonly said among game developers that in order for players to feel that their orders are instantaneously taken into account, the response to their actions should come within one hundred milliseconds. At 20 Hz, we are twice as fast as that so we are on the safe side. Running the physics more often is a waste of CPU.
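The speed threshold from the first point could be as simple as the sketch below. The function name and the threshold value are placeholders, not something taken from the Infiniworld code.

```python
import math

SPEED_THRESHOLD = 1e-3  # m/s; an arbitrary value picked for the example.

def snap_to_rest(vx, vy, threshold=SPEED_THRESHOLD):
    """Return a null velocity when the speed is under the threshold."""
    if math.hypot(vx, vy) < threshold:
        return (0.0, 0.0)
    return (vx, vy)
```

An entity whose velocity has been snapped to zero no longer moves, so no EntityMovedEvent needs to be posted for it.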

Edited on Monday 15 aug 2011:

Oops, there was a LOL bug in v0.0.4 so I released a patched version: v0.0.4.a. I updated the link at the top of this post. The bug was that units were moving together, all of them following your keypresses. I forgot to tell them to only listen to the orders containing their identification number :p. Fixed now!

We’ve got a physics engine to build.

Would you like some science with your game?

Not too much science now, since we are focusing today on physics only. And within physics, on mechanics only. Of the classical kind only. With a focus on dynamics only. And within this, of rigid bodies only. And all that in 2D only. This is a very tiny bit of science so we should have it implemented and tested by Monday, right? Right… -_-

Classical dynamics describes and predicts the motion of bodies under the influence of forces. If we model the legs of your in-game character by a ‘walk force’, then you can change the amplitude and direction of this force by pressing the WASD keys and see your character respond to it. If you’ve had a look at the code pushed on GitHub you might have noticed that the MoveCommand event has a force parameter. It is properly generated by the PygameController, but it is stupidly used because there is no physics engine:

    class EntityModel(......):
        ...
        def moveTo(self, pos):
            """Set the position of the entity."""
            self._pos.icopy(pos)
            self.post(events.EntityMovedEvent(self.entity_id, self._pos))

        def onMoveEntityRequest(self, event):
            # Only react to the orders addressed to this entity.
            if event.entity_id == self.entity_id:
                # The force is added to the position as if it were a displacement.
                self.moveTo(self._pos + event.force)

So what we are doing here is $\vec{x}(t + \Delta t) = \vec{x}(t) + \vec{F}$, which is as far from the Newton equations of motion as you can get while still kind of moving in the right direction.

Introducing the time step.

If we try to copy-paste the equations of motion into our code we hit a wall: physics is continuous, but our simulation is limited to using a time step. The time step is the $\Delta t$ in the equation above. In real life, that time step is tiny, so tiny we can say it is infinitely tiny and we can use calculus to solve the equations of motion exactly, at least in simple cases.

But a game is not simple: there are many bodies moving around at the same time, many forces applied on them at the same time, plus all the collisions that can occur. Solving the equations exactly is out of the question so we have to satisfy ourselves with an approximation that we can compute with numerical analysis.

The smaller our time step, the better the approximation. There are at least two limits to that statement:

- The precision of the floating point calculations is not infinite. A tiny time step can lead to differences in position or velocity that can hardly be represented (if at all) by a 64-bit float. Then your simulation explodes.

- The smaller the time step, the more computations we need to make. If you divide the time step by 10, you have to run your physics engine 10 times more to get to the same time. There is a limit to the number of computations that our computers can do in one second and if we use small time steps then our simulation is going to run late. If it takes you 0.02 seconds to compute the equations with a time step of 0.01 you’ll never catch up and the game will look super slow.

Euler integration.

What do the equations of motion look like when we discretize them with a time step? Well, there are a bunch of possibilities. They are all equivalent with an infinitesimal time step but they won’t be equivalent for us:

    # Possible implementation:
    position += velocity * dt
    velocity += acceleration * dt

    # Another implementation:
    velocity += acceleration * dt
    position += velocity * dt

    # Yet another one:
    position += velocity * dt + 0.5 * acceleration * dt * dt
    velocity += acceleration * dt

These are all variations of what many people call “Euler integration”. I don’t know why it’s called after Mr Euler since anyone who starts writing his/her first physics engine comes up with one of these. They just feel very straightforward and natural.

Euler integration works decently in some cases. However, it depends a lot on the choice of the time step. Indeed, with that integrator, the acceleration and the velocity are considered constant during the whole time step. That does not make sense: if the acceleration is constant (and not null), then the velocity CANNOT be constant during the time step. That’s the very definition of accelerating. Different time steps will give very different results and you are forced to use a small time step in order to have the best predictions you can. Which is bad, because small time steps force you to compute more.
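A quick experiment shows how much the result depends on the time step. This sketch uses the first variant listed above on a body starting at rest with a constant acceleration of 2 m/s², for which the exact position after one second is 1 m; the function name and default values are made up for the example.

```python
def euler_position(dt, duration=1.0, acceleration=2.0):
    """Integrate from rest with the first Euler variant; return position."""
    steps = round(duration / dt)
    position = velocity = 0.0
    for _ in range(steps):
        position += velocity * dt
        velocity += acceleration * dt
    return position

exact = 0.5 * 2.0 * 1.0 ** 2  # x = a * t^2 / 2 = 1.0
for dt in (0.5, 0.1, 0.01):
    # The error shrinks with dt: roughly 0.5, 0.1 and 0.01 here.
    print(dt, exact - euler_position(dt))
```

With only two steps the body covers half the distance it should; a hundred steps are needed to get within one percent.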

Verlet and Runge-Kutta integrators.

Fortunately there are other integration methods around. They are more complex, less straightforward, but not more complicated. For example, Minecraft uses a Verlet integrator. Another popular integrator is Runge-Kutta. There is a bit of a holy war on teh tubes about which one is better and it’s a bit hard to choose. So let’s apply science, shall we?

- Google scholar for “verlet integration” yields 16300 articles.

- Google scholar for “runge kutta integration” yields 78200 articles.

Therefore Runge-Kutta is better than Verlet :D.

Both Verlet and RK come in several flavors. However, from what I’ve seen around the tubes, what people often mean by “Verlet” is Position-Verlet, and “Runge-Kutta” is most of the time referring to Runge-Kutta-4.

You can have a look at the following articles:

- Verlet Explained: A Simple Time-Corrected Verlet Integration Method, by Jonathan “lonesock” Dummer.

- Runge-Kutta (RK4) integration for game physics, by Kai.

The idea behind position-Verlet is to not use a velocity vector at all. Instead, the velocity is implicit: that’s the distance between the last two positions divided by the last time step. This has the enormous advantage of keeping velocity and position in sync. The algorithm is also very light, it’s almost as light as Euler from a computational point of view. Verlet also lets you rewind a trajectory; that time-reversal symmetry is a nifty thing that has its uses in particle physics but I can’t think of a use-case in a game. Also Verlet is good at conserving the energy of the system, which is cool for serious simulations but we don’t care about it in games where external forces (those damn players keep wanting to move their characters) apply all the time.
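In its simplest form, with a constant time step and one dimension, position-Verlet boils down to the sketch below (the function names are chosen for the example; the time-corrected variant from the linked article handles varying time steps and is not shown).

```python
def verlet_step(current, previous, acceleration, dt):
    """Return the next position; no velocity is stored anywhere."""
    return 2.0 * current - previous + acceleration * dt * dt

def implicit_velocity(current, previous, dt):
    """Velocity recovered from the last two positions."""
    return (current - previous) / dt

# A body starting at rest (both positions equal), acceleration 2 m/s^2.
previous, current = 0.0, 0.0
for _ in range(2):
    previous, current = current, verlet_step(current, previous, 2.0, 0.5)
```

Swapping `current` and `previous` makes the same function run the trajectory backward, which is the time-reversal symmetry mentioned above.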

The idea behind RK4 is to sample the velocities and accelerations at different times within the time step and make use of Taylor series for doing its magic. It’s way less easy to grasp, and there are many more computations involved.
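For the curious, a one-dimensional RK4 step fits in a dozen lines. This is a generic textbook sketch, not the code used in Infiniworld; `rk4_step` and the `accel(x, v, t)` callback are names invented for the example.

```python
import math

def rk4_step(x, v, t, dt, accel):
    """One RK4 step for dx/dt = v and dv/dt = accel(x, v, t)."""
    # Four samples of the derivatives: start, two midpoints, end.
    k1x, k1v = v, accel(x, v, t)
    k2x = v + 0.5 * dt * k1v
    k2v = accel(x + 0.5 * dt * k1x, k2x, t + 0.5 * dt)
    k3x = v + 0.5 * dt * k2v
    k3v = accel(x + 0.5 * dt * k2x, k3x, t + 0.5 * dt)
    k4x = v + dt * k3v
    k4v = accel(x + dt * k3x, k4x, t + dt)
    # Weighted average of the four samples.
    x += dt / 6.0 * (k1x + 2.0 * k2x + 2.0 * k3x + k4x)
    v += dt / 6.0 * (k1v + 2.0 * k2v + 2.0 * k3v + k4v)
    return x, v

# Friction only (acceleration = -v): the exact speed decays as exp(-t).
x, v = rk4_step(0.0, 1.0, 0.0, 0.1, lambda x, v, t: -v)
error = abs(v - math.exp(-0.1))  # Well under one millionth.
```

Four evaluations of the acceleration per step is the price to pay, compared with one for Euler or Verlet.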

Yeah, Verlet is cool. However, I find it a bit weird. Indeed, how do you start it? You need at least two positions to begin with. Of course you can always say that they are equal, that’s easy, but then all your objects are created at rest which may not be what you want. Perhaps it’s enough: the arrow is at rest in the bow and the force of the string is applied only once when the arrow is fired. You’ll have to tweak the force using your time step to ensure that the arrow achieves the same speed regardless of the time step. Another possibility is to use Euler to define the second point. The example of the bow and arrow also illustrates the lack of impulses. An impulse is an instantaneous change of the velocity. Bouncing on a wall creates an impulse: your velocity is mirrored on the wall. With Verlet, just changing the position is not enough to simulate the impulse because your body won’t bounce in the right direction; instead you have to create a one-time pseudo force that takes the time step into account. Messy.

On the other hand, RK4 lets me do my physics the way I am used to: I have both my position and my velocity. RK4 is very resistant to a change in time step. So even though RK4 is heavier from a computation point of view, I can call it less often. I choose RK4. It’s not even that hard to implement: Integration basics, by Glenn Fiedler on gafferongames.com.

Once I have implemented the integrator, I will have to start the serious business: collisions.

Edit: One hour later…

Wow, that went pretty well. I spent more time writing the post than actually implementing the thing. The version v0.0.4 is pushed on GitHub, you can pull or download it already. See my previous post to know how.

Now, I’ll write something about how I implemented it. So little code has changed! Have a look:

https://github.com/Niriel/Infiniworld/commit/4ae2d4125899fe738fc4421697e79c954ffbeb4b

My first slime farm !

Woohoo, finally I have slimes ! Not many, for sure, but enough to start thinking about crafting pistons. But slimes are so cute, I feel bad punching them to use them as goo…

Edit, a bit later that evening…

Whoa, even more!

Oh I want to cuddle them all! But I killed them for a total of 16 slime balls. What am I going to do with 16 sticky pistons?

Also I noticed that the mouth of my slime-building is one pixel off. I’ll correct that!

Tile-based landscape frenzy!

On the First day, Niriel created the Entities. And the Entities were floating in the empty void. And Niriel got bored and said “Let there be tiles all over the place”. And there were tiles, and the Entities could walk on the tiles, and everybody was pretty much happy, except Entity number 3 who’s always complaining.

Two entities walking around in an area. Notice that the area is centered on one entity (the one controlled by the player at that time). The color of the tiles corresponds to the nature of the floor (rock, dirt, grass, sand, shallow water, deep water).

Download.

- Go there : https://github.com/Niriel/Infiniworld,

- click on the big gray “Downloads” button (right of the page),

- choose “v0.0.3”.

Play.

Many many keys !

- Enter/Return: create a new entity.

- ; and ' (semicolon and quote): take control of the previous/next entity.

- \ (backslash): create a new area. But it’s useless in this version since the areas created that way don’t have tiles.

- [ and ] (left and right square brackets): move to the previous or the next area. Note that the game starts in the area None, which means that no area is displayed. You must press [ or ] to start seeing something.

- . and / (period and slash): teleport the selected entity to the previous or the next area.

- W, A, S and D: move the selected entity. Note that diagonal movements are allowed, and in that case you are not perfectly lined up with the tiles: you moved by square root of two in x and y.

- Esc: quit. You can also click on the “close” button of the game window.

The controls are not awesome yet. They’re hard-coded for a US keyboard, and not even US international: the quote key doesn’t work for me because it waits for another key after that in order to put an accent on it. But hey, that’s a start.

The little new details.

- The window has a title now. I think there may be a bug in my version of Ubuntu preventing me from setting the title after creating the window (it only changes the name in the task bar). So I set the title before creating the window and hop, it works.

- FPS counter. Since the game loop authorizes a max of 60 FPS, that’s what I get. Actually the picture shows 59 FPS, it jitters between 60 and 59. Maybe because of the inaccuracy of time.sleep?

The big things.

There are two major modifications to the code:

- The world can have several areas.

- Areas have tiles.

When you run this demo, a world is generated. It contains three areas. Each area contains 16384 tiles (128 by 128). I chose this relatively high number to challenge myself and make sure I could render the area fast enough. If I couldn’t, I would give up on pygame and python. Fortunately I am still in my 60 FPS budget which makes me very happy. My first two implementations crashed me down to 5 FPS:

- The first implementation was the straight-forward brute force: “Ask pygame to display everything, pygame is smart enough to realize it has nothing to do 95 % of the time, right ?”. Actually it wasn’t that bad on small maps, as this method was faster than my second implementation.

- The second implementation just added a line to the first one with a little test in it: “For each tile, check if it intersects the view, and if it does then draw it”. The result was horrendous since I was processing 16k tiles. Looks like pygame.Rect.colliderect() is not super fast since I got a better performance without checking for smaller maps.

- I threw that away. Since I know in advance the min and max world coordinates of my View, I just have to look for the tiles that are within these coordinates. And it’s fast, and scales well. I could have done that in the first place but it’s a bit more code to write and maybe the other methods would have been okay.
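The third approach can be sketched as follows. This is only an illustration, not the code from the repository: the function names are made up for the example, and it assumes that tiles are 1 by 1 in world units and that the tile map is anything with a get method keyed by (x, y).

```python
import math


def visible_tile_coords(x_min, y_min, x_max, y_max):
    """Yield the (x, y) coordinates of the tiles overlapping the view.

    Assumption for this sketch: tile (x, y) covers the square
    [x, x + 1) by [y, y + 1) in world coordinates.
    """
    first_x = int(math.floor(x_min))
    first_y = int(math.floor(y_min))
    last_x = int(math.ceil(x_max))
    last_y = int(math.ceil(y_max))
    for y in range(first_y, last_y):
        for x in range(first_x, last_x):
            yield (x, y)


def tiles_to_draw(tile_map, x_min, y_min, x_max, y_max):
    """Return the (coords, tile) pairs that exist in the map and in the view."""
    result = []
    for coords in visible_tile_coords(x_min, y_min, x_max, y_max):
        tile = tile_map.get(coords)
        if tile is not None:  # The view may extend beyond the map.
            result.append((coords, tile))
    return result


# A 128 by 128 map, but only three tiles are ever looked at.
tile_map = dict(((x, y), 'grass')
                for x in range(-64, 64) for y in range(-64, 64))
visible = tiles_to_draw(tile_map, -0.5, 0.0, 1.5, 1.0)
```

The cost depends on the size of the view, not on the size of the map, which is why it scales.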

Python does not have two-dimensional arrays so implementing the TileMap was not straightforward. The first idea that comes to mind is to use a list of lists. I didn’t like it because:

- it is a bit hard to guarantee that all the lists have the same length;

- it’s a mess to resize, you have to extend or shrink and shift every list;

- I don’t like to write my coordinates between brackets like tiles[x][y];

- I cannot have negative indices ! Negative indices will probably be very nice to have when the world is procedurally generated, as I do not want to impose arbitrary barriers on two sides of my map.

And then I realized we’re in the marvelous world of Python and that we do not actually need rigid two-dimensional arrays when we have dictionaries and hashable tuples! So a tile map is stored in a dictionary: keys are (x, y) tuples. It is fast, and has none of the drawbacks listed above. Sold !
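A tiny sketch of the idea, using a plain dictionary. The tile values are just strings here to keep the example short, and the bounds helper is a hypothetical name added for the illustration.

```python
tiles = {}

# Python builds the tuple for us: tiles[x, y] is tiles[(x, y)].
tiles[0, 0] = 'grass'
tiles[1, 0] = 'sand'
tiles[-3, 7] = 'rock'   # Negative coordinates are ordinary keys.


def bounds(tile_map):
    """Return (x_min, y_min, x_max, y_max) of a non-empty tile map."""
    xs = [x for x, _ in tile_map]
    ys = [y for _, y in tile_map]
    return min(xs), min(ys), max(xs), max(ys)


# Missing tiles are simply absent; there is nothing to resize or shift.
missing = tiles.get((100, 100))
```

Growing the map in any direction is just adding keys, so there is no row to extend and no index to shift.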

The TileModel object is very simple, it just contains a nature for the floor, and a height for said floor. If the height is 0 it means you can walk on it. If it’s 1, it means it’s raised, like a cliff, and you can’t go there. I should have named it “elevation” instead of height. It is not a 3D game, and I must resist the temptation of allowing the player to stand on high tiles. That will be for the next game. Also, tiles don’t block the movements of the entities yet, since there is no physics engine; the entities are even free to run out of the map.

Next step: Physics !

Stay tuned to learn how I include string theory into the physics engine!

The first screenshot of Infiniworld!

Welcome back, fellow web traveler. Here is, as I promised you in my previous post, the very first screenshot of Infiniworld.

Game features:

- Spawn your very own blocky creatures with a single key stroke (Enter/Return) !

- Take control of your creatures and tell them where to go (WASD keys to move) !

- A stunningly fluid 60 FPS refresh rate on any recent machine !

- Save your battery life: the game sleeps when it has nothing to do !

- Spy on yourself by logging everything you do into a file, and learn about what’s going on under the hood !

Game does not feature:

- Physics engine.

- Any kind of landscape.

- Boobies and explosions (Actually they are in game, but only allegorically, just use your imagination).

- Things to do.

Download and play this first interactive version of Infiniworld !

GitHub link: https://github.com/Niriel/Infiniworld/tree/v0.0.2

If you are a git user:

git clone git@github.com:Niriel/Infiniworld.git

If you just want a zip:

- Go there : https://github.com/Niriel/Infiniworld/

- Click on the big “Downloads” button on the right of the screen.

- A window appears. Under “Download Packages”, click on v0.0.2.

- That’s it, you have the zip !

Run the game.

Enter the src directory and type “python solo.py”.

Implementation.

How does it all work ? you ask. Here is how:

- Model classes: WorldModel, EntityModel.

- View classes: PygameView, AreaView, EntityView.

- Controller classes: PygameController, PlayerController, GameLoopController.

Models.

The world is extremely simple for now. There is no landscape, no map, no tiles. It contains nothing but some entities. I call “entity” anything that exists in the game world and can move: creatures, fireballs…

Entities are represented by an EntityModel class and have a position stored as a two-dimensional vector (see geometry.Vector). Each EntityModel has a unique entity_id. Every event regarding a given entity will carry that entity_id. Today, the role of the EntityModel is to change its position when it is asked to do so. Since there is no landscape and no physics engine, it always accepts any order it receives. That means that any MoveEntityRequest results in an EntityMovedEvent.

For now, the only responsibilities of WorldModel are to create and destroy EntityModel instances, and keep a list of them.

Views.

The PygameView is the root of everything that is displayed on the screen. It opens the window and draws everything that needs to be drawn when it receives a RenderFrameEvent.

The AreaView displays a part of the world: landscape, entities. There is no landscape so it just displays entities for now. Each time AreaView receives an EntityCreatedEvent, it instantiates an EntityView object for representing it.

EntityView objects listen to the EntityMovedEvents to remain in sync with the EntityModel objects.

These views use pygame.sprite for showing themselves on the screen. The sprites of the EntityView objects are blitted onto the sprite of the AreaView object, which is blitted onto the display by PygameView. On the screenshot, the display is black, the AreaView is gray and the EntityViews are white.

Controllers.

The PygameController translates the pygame events (which are SDL events) into Infiniworld events. For example, it translates the fact that Escape key is pressed into a QuitEvent.

The PlayerController contains an entity_id: the entity being controlled by the player. When the PlayerController receives a PlayerMovedEvent from the PygameController, it responds by posting a MoveEntityRequest with the proper entity_id.

The last controller is the GameLoopController. The current implementation is pretty naive and simple. It posts a ProcessInputsEvent and a RenderFrameEvent 60 times per second. ProcessInputsEvent wakes up the PygameController and RenderFrameEvent wakes up the PygameView. Note that the GameLoopController does not use any pygame function, so it does not rely on pygame.time.Clock for running at 60 FPS. Instead, it uses the time module from the standard library. The game loop stops when the GameLoopController receives a QuitEvent.
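For the curious, a naive fixed-rate loop built on the time module alone could look like the sketch below. It is not the actual GameLoopController: run_game_loop, on_frame and should_quit are names invented for the example, and on_frame stands for "post a ProcessInputsEvent and a RenderFrameEvent, then pump".

```python
import time


def run_game_loop(on_frame, should_quit, fps=60,
                  clock=time.time, sleep=time.sleep):
    """Call on_frame() about fps times per second until should_quit().

    clock and sleep are parameters only so that the loop can be tested
    with a fake clock; by default they come from the time module.
    Returns the number of frames that were run.
    """
    period = 1.0 / fps
    next_frame = clock()
    frames = 0
    while not should_quit():
        on_frame()
        frames += 1
        next_frame += period
        delay = next_frame - clock()
        if delay > 0:
            # Nothing to do until the next frame: sleep, save the battery.
            sleep(delay)
        else:
            # We are late. Do not try to catch up, restart from now.
            next_frame = clock()
    return frames


# Deterministic demonstration with a fake clock instead of real time.
fake_time = {'now': 0.0}
calls = []
frames = run_game_loop(
    on_frame=lambda: calls.append('frame'),
    should_quit=lambda: len(calls) >= 3,
    clock=lambda: fake_time['now'],
    sleep=lambda delay: fake_time.update(now=fake_time['now'] + delay))
```

With the real time.sleep, the wake-up time is only approximate, so a frame counter built on such a loop can be expected to wobble a little around the target.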

What next ?

The goal of this demo was to have something interactive as quickly as I could. It works: I can create entities and move them around. But it’s not pretty: the entities are teleported, it is not fluid at all. It would be nice to have a physics engine. However, a physics engine should manage collisions, and I do not have much to collide against for now. I need a landscape. So I think that the next step is to implement tiles, maps, areas, etc..

The WorldModel will hold many AreaModel instances in memory: dungeon floors, cities, overworld, etc. The EntityModel needs to be extended to accommodate the fact that there are now several areas. The AreaView should show one area only. Entities should be able to move from one area to another. Lots of work to do !

Step 2: interactivity.

In the previous article I explained how I implemented the event management. Now, our software bricks can talk to each other. Let’s get them to talk ! Well… let’s get them, first. We need bricks. The good ones.

Too often I started similar projects, focusing on an aspect such as the procedural landscape generation. It worked ! But I got stuck. I ended up with an ASCII dump of something representing a world, but I couldn’t do anything with it. There was no game built to explore that world. This time I want to start making the game interactive in the first place: we need to be able to explore before having something to explore.

I chose pygame for managing the input and the output. The input being the player’s fingers on a keyboard/mouse/joypad, and output being the screen and loud speakers reaching the player’s eyes and ears. Because I apply some kind of Model-View-Controller (I always say “some kind” because everyone has his own MVC), I will wrap the input in a Controller, and the output in a View.

We will have a PygameController and a PygameView class. I don’t want to use anything related to pygame in the Model classes, to make sure that when I decide to use a different rendering engine I only have to rewrite the input and output without touching the game logic at all. There will probably be many other pygame-related views, probably one view per character to be displayed, for example. These views will be managed by the main PygameView. It would be wise to group everything pygame-related in one single package so that it can be replaced. Other views, like a console view for the server, would be kept in another package.

And, finally, we’ll need a game loop. I’ll go for the simplest game loop ever, but in a near future I shall write a whole entry on the subject.

Let’s get to work ! Very soon, the first screenshot ! I bet you can’t wait :).

The event management is in place.

Hello hello !

As promised I worked on what I called “Event Management”, the bare bones of my lousy implementation of the Model-View-Controller pattern. It’s uploaded on GitHub :

https://github.com/Niriel/Infiniworld/tree/v0.0.1

The important module is evtman.py, “evtman” standing for “Event Management”. It contains three classes:

- Event

- Listener

- EventManager

The classes Event and Listener are abstract, and are meant to be subclassed. The Event class looks a bit complicated, but that’s because it automates things in order to make its subclasses extremely easy to define. For example :

    >>> class CharacterMovedEvent(Event):
    ...     name = "Character Moved Event"
    ...     attributes = ('character_id', 'position_from', 'position_to')
    ...
    >>> event = CharacterMovedEvent('Bunny', (0, 0), (32, 0))
    >>> print event.character_id
    Bunny
    >>> print event.position_to
    (32, 0)
    >>> print repr(event)
    CharacterMovedEvent(character_id='Bunny', position_from=(0, 0), position_to=(32, 0))
    >>> print str(event)
    Character Moved Event
    character_id = 'Bunny'
    position_from = (0, 0)
    position_to = (32, 0)

See ? Very easy. Now that I think of it, the name attribute should go away because it’s pretty useless; I put it there to have a clean human-readable name but the class name is enough. I made sure that repr would not lead to insanely long lines by capping at 50 characters per attribute. Very useful when events carry a complete dungeon map in a huge list/dictionary/tuple/whatever.
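To give an idea of what the base class has to do, here is a minimal sketch of an Event that fills its attributes from the constructor arguments and caps each attribute at 50 characters in repr. It is a reconstruction for illustration, not the code of evtman.py: the max_repr_length attribute and the _short helper are names I made up, and the real class does more (str, for instance).

```python
class Event(object):
    """Base class: subclasses only have to list their attribute names."""
    attributes = ()
    max_repr_length = 50  # Cap per attribute (hypothetical name).

    def __init__(self, *args):
        if len(args) != len(self.attributes):
            raise TypeError("%s expects %i arguments, got %i" % (
                self.__class__.__name__, len(self.attributes), len(args)))
        for name, value in zip(self.attributes, args):
            setattr(self, name, value)

    def _short(self, value):
        """Return repr(value), truncated so that logs stay readable."""
        text = repr(value)
        if len(text) > self.max_repr_length:
            text = text[:self.max_repr_length - 3] + '...'
        return text

    def __repr__(self):
        pairs = ["%s=%s" % (name, self._short(getattr(self, name)))
                 for name in self.attributes]
        return "%s(%s)" % (self.__class__.__name__, ", ".join(pairs))


class CharacterMovedEvent(Event):
    attributes = ('character_id', 'position_from', 'position_to')


event = CharacterMovedEvent('Bunny', (0, 0), (32, 0))
huge = CharacterMovedEvent('Bunny', (0, 0), list(range(1000)))
```

The subclass is two lines long, which is the whole point: all the work lives in the base class.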

It is also very easy to subclass Listeners:

    class CharacterView(Listener):
        def __init__(self, character_id):
            self._character_id = character_id
            self._x = self._y = 0

        def onCharacterMovedEvent(self, event):
            if event.character_id == self._character_id:
                # 32 pixels per meter, and the display's Y axis goes
                # down from the top while my Y axis goes up.
                self._x = event.position_to[0] * 32
                self._y = 480 - event.position_to[1] * 32

That’s it, you have your View. It should be a pygame Sprite, the zoom level (32) and the window size (480) should not be hardcoded here, but that’s not more complicated than that.

And to use it is very simple:

    def example():
        class CharacterMovedEvent(Event):
            attributes = ('character_id', 'position_from', 'position_to')

        class CharacterView(Listener):
            def __init__(self, character_id):
                self._character_id = character_id
                self._x = self._y = 0

            def __str__(self):
                return "%s: pos = (%i, %i)" % (self._character_id,
                                               self._x, self._y)

            def onCharacterMovedEvent(self, event):
                if event.character_id == self._character_id:
                    self._x = event.position_to[0] * 32
                    self._y = 480 - event.position_to[1] * 32
        #
        bunny_view = CharacterView('bunny')
        hamster_view = CharacterView('hamster')
        event_manager = EventManager()
        event_manager.register(bunny_view)
        event_manager.register(hamster_view)
        #
        event = CharacterMovedEvent('bunny', (0, 0), (1, 2))
        event_manager.post(event)
        event_manager.pump()
        #
        print bunny_view
        print hamster_view

And the result is:

    bunny: pos = (32, 416)
    hamster: pos = (0, 0)

There is one thing missing, though: some Listeners may want to post events. For example, the CharacterModel is a Listener which should be able to post the CharacterMovedEvent. This is achieved by giving the event manager to the listener. Add this to the previous code:

    class CharacterModel(Listener):
        def __init__(self, event_manager, character_id):
            Listener.__init__(self)
            self._event_manager = event_manager
            self._character_id = character_id
            self._x = self._y = 0

        def moveTo(self, new_x, new_y):
            old_x = self._x
            old_y = self._y
            self._x = new_x
            self._y = new_y
            event = CharacterMovedEvent(self._character_id, (old_x, old_y), (new_x, new_y))
            self._event_manager.post(event)

    hamster_model = CharacterModel(event_manager, 'hamster')
    event_manager.register(hamster_model)
    hamster_model.moveTo(3, 3)
    event_manager.pump()
    print hamster_view

Result:

hamster: pos = (96, 384)

In this example, the model doesn’t listen to anything, but in a real situation it would.

That’s all there is to it ! It just works.

Just don’t forget to unregister the Listeners you don’t use any longer:

event_manager.unregister(hamster_view)

Sure the event_manager keeps only Weak References to the Listeners, so they automatically disappear from its lists. But if they disappear right in the middle of an iteration, that may explode. If you see such an explosion, it means you forgot to properly unregister.

A last word: you can post as many events as you want. They will all be processed, in the order you posted them, when you call the pump method of the event manager. Some event handlers will post events, or even register new Listeners. That is perfectly okay, the event manager is happy with that, the pump will go on until the event queue is empty.
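The behavior of pump can be pictured with the stripped-down sketch below. It is an assumption about the general shape, not the real evtman.py: it keeps strong references instead of weak ones, and PingEvent, PongEvent and Echo exist only for the demonstration. Handlers are found by name, following the onCharacterMovedEvent convention used above.

```python
from collections import deque


class EventManager(object):
    """Simplified sketch: strong references, no error handling."""

    def __init__(self):
        self._listeners = []
        self._queue = deque()

    def register(self, listener):
        self._listeners.append(listener)

    def unregister(self, listener):
        self._listeners.remove(listener)

    def post(self, event):
        self._queue.append(event)

    def pump(self):
        # Handlers may post new events: keep going until the queue is empty.
        while self._queue:
            event = self._queue.popleft()
            handler_name = 'on' + event.__class__.__name__
            # Iterate over a copy: handlers may register or unregister.
            for listener in list(self._listeners):
                handler = getattr(listener, handler_name, None)
                if handler is not None:
                    handler(event)


class PingEvent(object):
    pass


class PongEvent(object):
    pass


class Echo(object):
    """Answers every PingEvent by posting a PongEvent."""

    def __init__(self, event_manager, log):
        self._event_manager = event_manager
        self._log = log

    def onPingEvent(self, event):
        self._log.append('ping')
        self._event_manager.post(PongEvent())

    def onPongEvent(self, event):
        self._log.append('pong')


log = []
event_manager = EventManager()
event_manager.register(Echo(event_manager, log))
event_manager.post(PingEvent())
event_manager.post(PingEvent())
event_manager.pump()
```

Both pings are handled before the pongs they caused, because new events go to the back of the queue.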

EDIT:

- I got rid of the ‘name’ variable of events. It was 100 % useless.

- I introduced a SingleListener class, which is for Listeners that are interested in ONE event manager only. And to be fair, that’s the case most of the time.

Who controls the controllers?

The Model-View-Controller pattern is quite a wonderful thing. I had some trouble accepting it because I was born at a time when the most powerful computer in the world had 8 MB of RAM and a CPU beating at 80 MHz. I still feel a squeeze in my heart when I write an if statement inside a for loop, so I found it hard to accept replacing function calls with messages broadcast all over the place to components that don’t care. To me, it’s like taking all the axons away from a nervous system and asking the hormones to do their job instead: a tremendous waste of memory and CPU. But memory and CPU are cheap now, and my ambitions are relatively modest from a performance point of view. So I gave in and am now a happy man.

I won’t spend much time describing it because others have done it quite nicely before me. I really urge you to read the following articles if you don’t really know what it’s about.

The idea is to separate the

- game logic –or Models– (physics engine, position of the characters, tiles in the map)

- from the way it is presented –by Views– (sprites on the screen, debugging console, a bit of network code that sends the info to the client who is the one doing the actual rendering)

- and from the way it is controlled –by Controllers– (keyboard, mouse, clock, network sending info from a client or a server).

This makes developing our game a bit like playing with Lego bricks: adding or removing a brick does not interfere with anything. Models, Views and Controllers send Events to an Event Manager which takes care of forwarding them to other Models, Views and Controllers.

So who controls the Controllers ? The Event Manager does. It also manages the Views and the Models.

For example, the server does not need to display anything, only the client does. So you just delete all the graphic Views from the server code. You can plug a console View that shows the server load if you want. Another example: if you want to replace my PyGame 2D view by some 3D OpenGL magic, go for it. The game logic does not care one bit. This is all very well described in the two articles I linked up there.

However, I would like to add a few things in this post.

Brown’s implementation does not scale very well. It works perfectly of course and has the immense advantage of being very easy to understand, which is exactly why Brown chose this implementation for writing his tutorial. But when you look at it, 1) every Event is sent to every Listener (Models, Views or Controllers) ; 2) from there, a bunch of if statements will decide how the Listener will react, if it reacts at all because on many occasions the Listener doesn’t even care about that type of Event. Stone me for optimizing early, but it could be interesting to have the Listeners specify which kinds of events they are interested in (solving problem 1), and give to the Event Manager a direct handle to the code that should react to it, instead of filtering with ifs (solving problem 2).
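One possible way to get both properties is sketched here, as an idea rather than a finished design. At registration time, the manager looks for methods named on followed by the event class name and stores them in a dictionary keyed by that name. The class IndexedEventManager and the two demo listeners are hypothetical; QuitEvent and RenderFrameEvent are redefined as empty classes to keep the example self-contained.

```python
from collections import defaultdict


class IndexedEventManager(object):
    """Maps each event class name to the handlers that want it."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def register(self, listener):
        # The method names declare which events the listener cares about.
        for attr_name in dir(listener):
            if attr_name.startswith('on') and attr_name.endswith('Event'):
                handler = getattr(listener, attr_name)
                if callable(handler):
                    self._handlers[attr_name[2:]].append(handler)

    def dispatch(self, event):
        """Call the interested handlers only; return how many were called."""
        handlers = self._handlers.get(type(event).__name__, ())
        for handler in handlers:
            handler(event)
        return len(handlers)


class QuitEvent(object):
    pass


class RenderFrameEvent(object):
    pass


class GameLoop(object):
    def __init__(self):
        self.running = True

    def onQuitEvent(self, event):
        self.running = False


class Screen(object):
    def __init__(self):
        self.frames = 0

    def onRenderFrameEvent(self, event):
        self.frames += 1


manager = IndexedEventManager()
game_loop = GameLoop()
screen = Screen()
manager.register(game_loop)
manager.register(screen)
rendered = manager.dispatch(RenderFrameEvent())
quitted = manager.dispatch(QuitEvent())
```

Each event reaches only the handlers that asked for it, and no listener needs an if statement to filter event types.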

Both Brown and Koonsolo apply the traditional MVC pattern which allows the View to know something about the Model. For example, the DragonSprite View really has a pointer to the DragonCharacter Model. I don’t think I’ll do that.

The Model sure ignores the View but the View knows about the Model. This asymmetry feels dirty to me. It’s almost an invitation to modify the Model from the View, which is a bad thing. But even if you behave, I am still annoyed by having to carry full objects in my Events. For example, the MonsterAppearedEvent will have to carry the DragonCharacter object so that the DragonSprite can be created and point to it. That complicates the serialization when you need to carry Events over the network and that makes debugging a mess. First, too many pointers and references: you don’t know who owns who, and if you use WeakRefs in the View to avoid memory leaks you’ll end up with broken pointers. Second, when you print an event into a log file it doesn’t help you at all because the CharacterMovedEvent does not tell you where the character moved ; indeed the View just has to look into its model to get the info. Third, it becomes difficult to test the View without testing the Model itself.

Does the Sprite really need to know the whole Model ? Of course not. If the Model moves, then the sprite just needs to know the new position, and this position can be stored in the CharacterMovedEvent.

One advantage I can see when one sends the full Model is that the View can initialize itself in one go. When the View is created, it knows already everything about the model and can display it properly at the right place. But we can also do it with events. You can have the CharacterCreatedEvent contain enough info for the View to initialize itself. And if it’s still not enough, the View can post a CharacterPositionRequest Event and wait for the Model to answer with a CharacterPositionEvent Event.

What I’m going for is not traditional MVC, I’ll use Events all the way. Which means that there is no real difference between a Model, a View and a Controller, at least not from an implementation point of view. They’re all Listeners, waiting for events to tickle them and posting events when they feel like it. However, what I’m not doing, is having all the Models talking to each other through events. I want to separate Model, View and Controller, I don’t want to separate the model from itself unless there’s a very good reason to do so. Can’t think of any right now.

So I’ll be working on that. I’ll tell you when it’s pushed on GitHub!